Agentic Apps

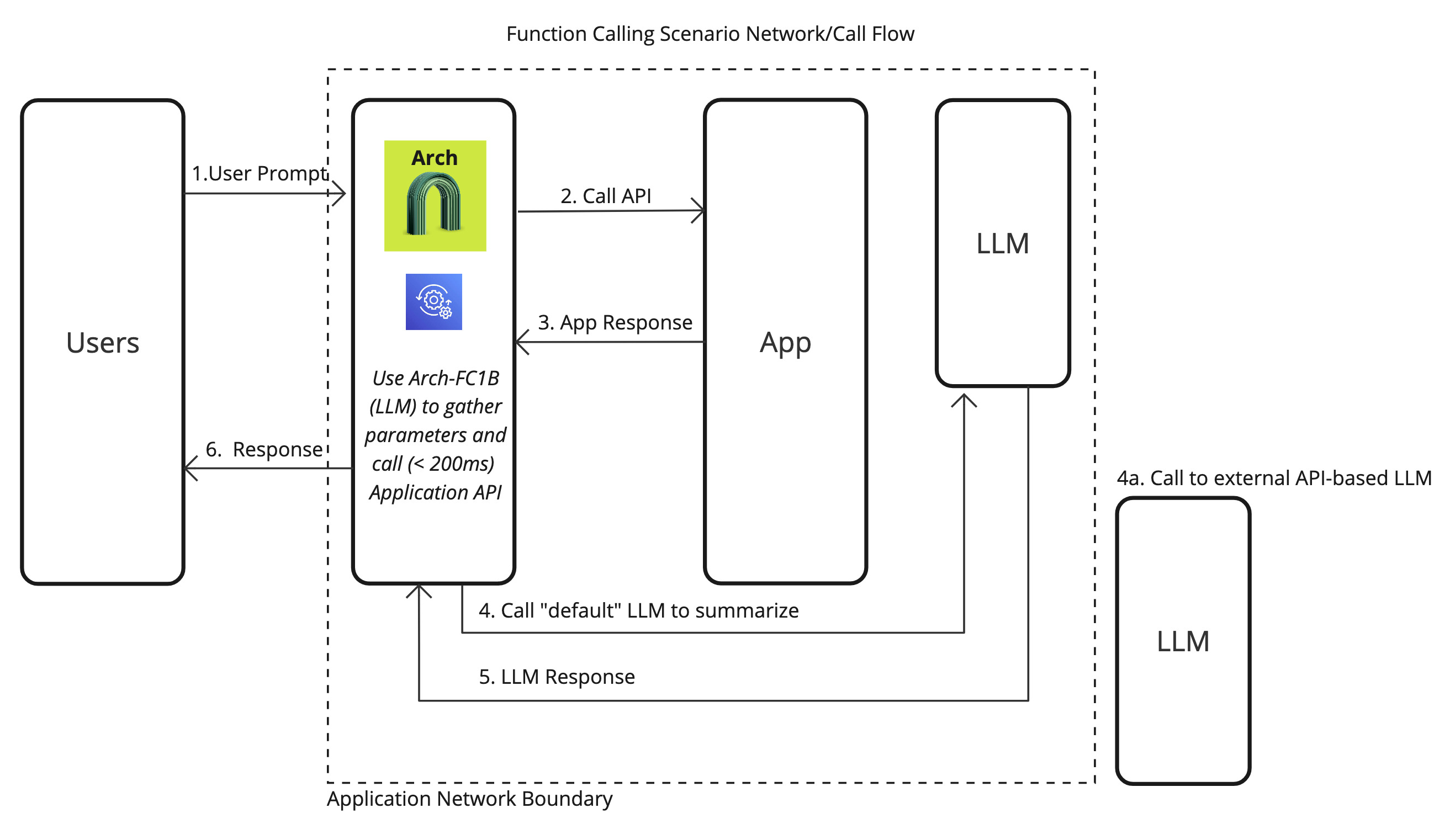

Arch helps you build personalized agentic applications by calling application-specific (API) functions via user prompts. This involves any predefined functions or APIs you want to expose to users to perform tasks, gather information, or manipulate data. This capability is generally referred to as function calling, where you can support “agentic” apps tailored to specific use cases - from updating insurance claims to creating ad campaigns - via prompts.

Arch analyzes prompts, extracts critical information from prompts, engages in lightweight conversation with the user to gather any missing parameters and makes API calls so that you can focus on writing business logic. Arch does this via its purpose-built Arch-Function - the fastest (200ms p50 - 12x faser than GPT-4o) and cheapest (44x than GPT-4o) function calling LLM that matches or outperforms frontier LLMs.

Single Function Call

In the most common scenario, users will request a single action via prompts, and Arch efficiently processes the request by extracting relevant parameters, validating the input, and calling the designated function or API. Here is how you would go about enabling this scenario with Arch:

Step 1: Define Prompt Targets

1version: v0.1

2listener:

3 address: 127.0.0.1

4 port: 8080 #If you configure port 443, you'll need to update the listener with tls_certificates

5 message_format: huggingface

6

7# Centralized way to manage LLMs, manage keys, retry logic, failover and limits in a central way

8llm_providers:

9 - name: OpenAI

10 provider: openai

11 access_key: $OPENAI_API_KEY

12 model: gpt-3.5-turbo

13 default: true

14

15# default system prompt used by all prompt targets

16system_prompt: |

17 You are a network assistant that just offers facts; not advice on manufacturers or purchasing decisions.

18

19prompt_targets:

20 - name: network_qa

21 endpoint:

22 name: app_server

23 path: /agent/network_summary

24 description: Handle general Q/A related to networking.

25 default: true

26 - name: reboot_devices

27 description: Reboot specific devices or device groups

28 endpoint:

29 name: app_server

30 path: /agent/device_reboot

31 parameters:

32 - name: device_ids

33 type: list

34 description: A list of device identifiers (IDs) to reboot.

35 required: true

36 - name: device_summary

37 description: Retrieve statistics for specific devices within a time range

38 endpoint:

39 name: app_server

40 path: /agent/device_summary

41 parameters:

42 - name: device_ids

43 type: list

44 description: A list of device identifiers (IDs) to retrieve statistics for.

45 required: true # device_ids are required to get device statistics

46 - name: time_range

47 type: int

48 description: Time range in days for which to gather device statistics. Defaults to 7.

49 default: 7

50

51# Arch creates a round-robin load balancing between different endpoints, managed via the cluster subsystem.

52endpoints:

53 app_server:

54 # value could be ip address or a hostname with port

55 # this could also be a list of endpoints for load balancing

56 # for example endpoint: [ ip1:port, ip2:port ]

57 endpoint: host.docker.internal:18083

58 # max time to wait for a connection to be established

59 connect_timeout: 0.005s

Step 2: Process Request Parameters

Once the prompt targets are configured as above, handling those parameters is

1from flask import Flask, request, jsonify

2

3app = Flask(__name__)

4

5

6@app.route("/agent/device_summary", methods=["POST"])

7def get_device_summary():

8 """

9 Endpoint to retrieve device statistics based on device IDs and an optional time range.

10 """

11 data = request.get_json()

12

13 # Validate 'device_ids' parameter

14 device_ids = data.get("device_ids")

15 if not device_ids or not isinstance(device_ids, list):

16 return (

17 jsonify({"error": "'device_ids' parameter is required and must be a list"}),

18 400,

19 )

20

21 # Validate 'time_range' parameter (optional, defaults to 7)

22 time_range = data.get("time_range", 7)

23 if not isinstance(time_range, int):

24 return jsonify({"error": "'time_range' must be an integer"}), 400

25

26 # Simulate retrieving statistics for the given device IDs and time range

27 # In a real application, you would query your database or external service here

28 statistics = []

29 for device_id in device_ids:

30 # Placeholder for actual data retrieval

31 stats = {

32 "device_id": device_id,

33 "time_range": f"Last {time_range} days",

34 "data": f"Statistics data for device {device_id} over the last {time_range} days.",

35 }

36 statistics.append(stats)

37

38 response = {"statistics": statistics}

39

40 return jsonify(response), 200

41

42

43if __name__ == "__main__":

44 app.run(debug=True)

Parallel & Multiple Function Calling

In more complex use cases, users may request multiple actions or need multiple APIs/functions to be called simultaneously or sequentially. With Arch, you can handle these scenarios efficiently using parallel or multiple function calling. This allows your application to engage in a broader range of interactions, such as updating different datasets, triggering events across systems, or collecting results from multiple services in one prompt.

Arch-FC1B is built to manage these parallel tasks efficiently, ensuring low latency and high throughput, even when multiple functions are invoked. It provides two mechanisms to handle these cases:

Step 1: Define Prompt Targets

When enabling multiple function calling, define the prompt targets in a way that supports multiple functions or API calls based on the user’s prompt. These targets can be triggered in parallel or sequentially, depending on the user’s intent.

Example of Multiple Prompt Targets in YAML:

1version: v0.1

2listener:

3 address: 127.0.0.1

4 port: 8080 #If you configure port 443, you'll need to update the listener with tls_certificates

5 message_format: huggingface

6

7# Centralized way to manage LLMs, manage keys, retry logic, failover and limits in a central way

8llm_providers:

9 - name: OpenAI

10 provider: openai

11 access_key: $OPENAI_API_KEY

12 model: gpt-3.5-turbo

13 default: true

14

15# default system prompt used by all prompt targets

16system_prompt: |

17 You are a network assistant that just offers facts; not advice on manufacturers or purchasing decisions.

18

19prompt_targets:

20 - name: network_qa

21 endpoint:

22 name: app_server

23 path: /agent/network_summary

24 description: Handle general Q/A related to networking.

25 default: true

26 - name: reboot_devices

27 description: Reboot specific devices or device groups

28 endpoint:

29 name: app_server

30 path: /agent/device_reboot

31 parameters:

32 - name: device_ids

33 type: list

34 description: A list of device identifiers (IDs) to reboot.

35 required: true

36 - name: device_summary

37 description: Retrieve statistics for specific devices within a time range

38 endpoint:

39 name: app_server

40 path: /agent/device_summary

41 parameters:

42 - name: device_ids

43 type: list

44 description: A list of device identifiers (IDs) to retrieve statistics for.

45 required: true # device_ids are required to get device statistics

46 - name: time_range

47 type: int

48 description: Time range in days for which to gather device statistics. Defaults to 7.

49 default: 7

50

51# Arch creates a round-robin load balancing between different endpoints, managed via the cluster subsystem.

52endpoints:

53 app_server:

54 # value could be ip address or a hostname with port

55 # this could also be a list of endpoints for load balancing

56 # for example endpoint: [ ip1:port, ip2:port ]

57 endpoint: host.docker.internal:18083

58 # max time to wait for a connection to be established

59 connect_timeout: 0.005s