Overview

Arch is a smart edge and AI gateway for AI agents, natively designed to handle and process prompts, not just network traffic.

Built by contributors to the widely adopted Envoy Proxy, Arch handles the pesky low-level work of building agentic apps, such as applying guardrails, clarifying vague user input, routing prompts to the right agent, and unifying access to any LLM. It is a protocol-friendly, framework-agnostic infrastructure layer designed to help you build and ship agentic apps faster.

In this documentation, you will learn how to quickly set up Arch to trigger API calls via prompts, apply prompt guardrails without writing any application-level logic, simplify interactions with upstream LLMs, and improve observability, all while streamlining your application development process.

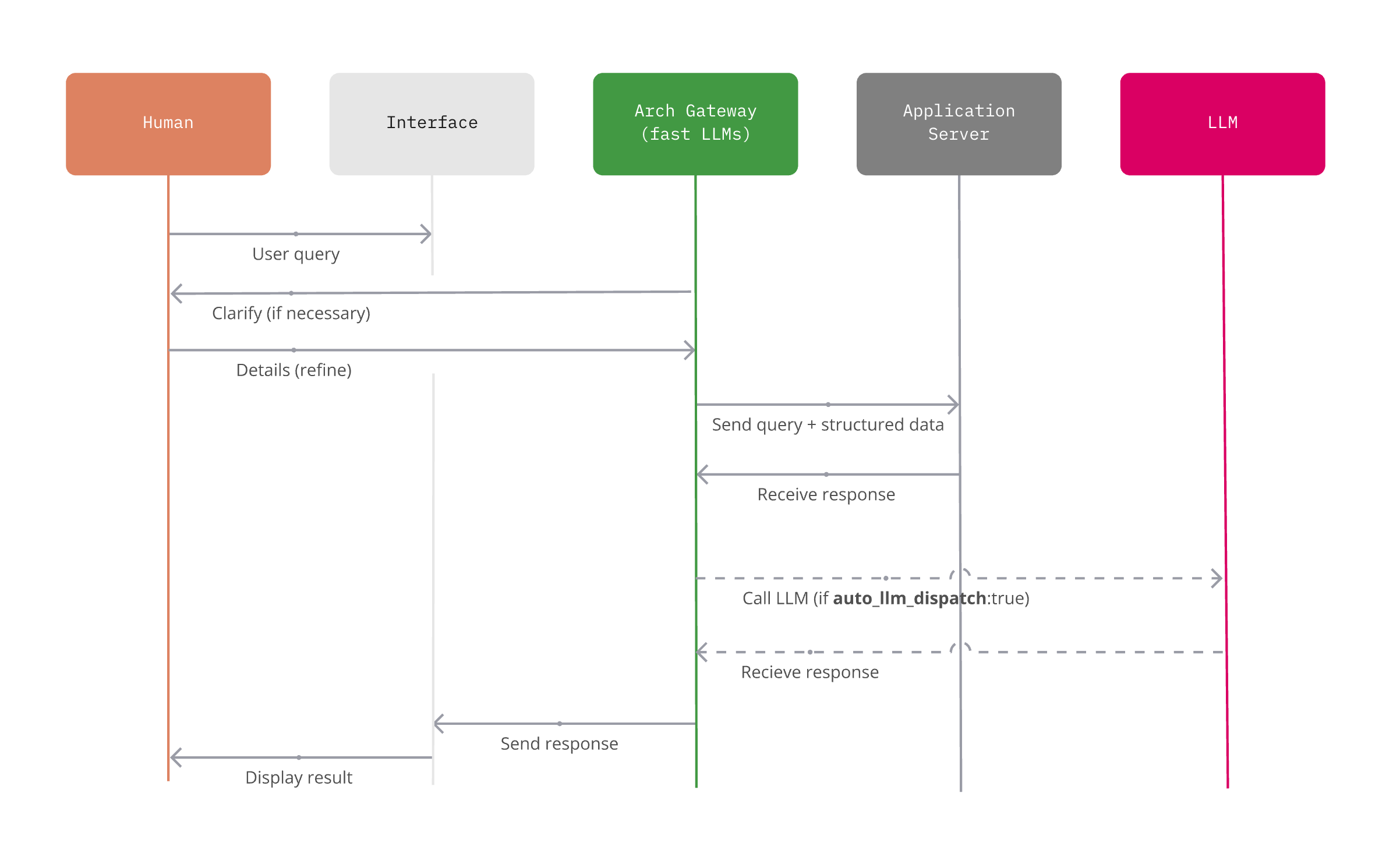

High-level network flow showing where Arch Gateway sits in your agentic stack. Arch is designed for both ingress and egress prompt traffic.

Get Started

This section introduces you to Arch and helps you get set up quickly:

Overview of Arch and documentation navigation

Explore Arch’s features and developer workflow

Learn how to quickly set up and integrate Arch

Concepts

Dive deep into the essential ideas and mechanisms behind Arch:

Learn about the technology stack

Explore Arch’s LLM integration options

Understand how Arch handles prompts

Guides

Step-by-step tutorials for practical Arch use cases and scenarios:

Instructions on securing and validating prompts

A guide to effective function calling

Learn to monitor and troubleshoot Arch

Build with Arch

For developers extending and customizing Arch for specialized needs:

Discover how to create and manage custom agents within Arch

Integrate RAG for knowledge-driven responses